Refactoring is Better with Metatables

I’ve been able to spend the last few days refactoring LINK and it has been going surprisingly well.

For quite a while, I’ve wanted to modify LINK to update based on transactions, meaning any changes made during a frame wait to be committed until the end of the frame instead of being applied as soon as they’re finished.

The basic idea is that if all the critical data storage happens in one place, there’ll be fewer parts of the code to reason about and less I have to keep in my head, which should result in a more stable program.

I’ll be the first to admit I’ve been intimidated by this refactor, and I find the fear really comes from not knowing where to start.

LINK has been developed in a very cowboy coding style, which I honestly believe is a boon during initial development when the idea hasn’t fully matured quite yet.

However, it does have the downside of leaving a mess of spaghetti code, which I’m no stranger to.

As usual, when I want to add a new feature, I pick the spot I think fits best and ram it in there. If there are any quirks, I’ll work around them or find a better place to put the new feature.

Point being, I set and fetch data from the “item” data structure anywhere I please, which can have some interesting side effects.

Behind the Scenes

What I call an “item” is the name I originally gave the generic object that stores “notes”. The “note” itself is an array that stores the text line by line. However, when I added auto wrapping I created “note_raw” which simply stores the full text as a string. The “note” is now only used for rendering and interacting with the on-screen text.
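To make the terminology a little more concrete, here’s a rough sketch of how the two fields relate. Only the names note and note_raw come from LINK; new_item, wrap_lines, and the naive fixed-width wrapping are hypothetical stand-ins, since this post doesn’t describe how the real auto wrapping works.

```lua
-- Hypothetical sketch of an "item": note_raw keeps the full text,
-- note keeps the wrapped lines used for rendering and on-screen editing.

-- Naive fixed-width wrap (an assumption, not LINK's real auto wrapping).
local function wrap_lines(text, width)
  local lines = {}
  for i = 1, #text, width do
    lines[#lines + 1] = text:sub(i, i + width - 1)
  end
  return lines
end

local function new_item(text, width)
  return {
    note_raw = text,                -- full text as a single string
    note = wrap_lines(text, width), -- derived line-by-line view
  }
end

local item = new_item("hello world", 5)
-- item.note is { "hello", " worl", "d" }
```

The design point is that note_raw is the source of truth and note can always be regenerated from it.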

Now, when talking about these “items” in a normal end-user blog post, I call them “notes” because that’s how a user interacting with LINK would see them. This is reinforced by the fact that internally, every object is stored as a text note with some magic applied behind the scenes.

If that sounds confusing, it is.

I only need to make the differentiation because this post is a bit more technical than most.

Suffice it to say, programming is a messy job where you pick a generic term to describe your main character, let’s say “guy”, add another word to describe the type of file “guy” is stored in, we’ll choose “brush” because that’s what the program “guy” was drawn in calls them, and then it becomes an official part of the lore.

Okay, I got a little off topic here.

Lua Metatables to the Rescue

Since I am changing the item data wherever and whenever I please, I need to know where this is happening.

I’ve always theorized that I could use metatables to assist in refactoring code, but none of my Lua projects ever got big enough to necessitate such a roundabout way of accomplishing it.

With the main file I add features to weighing in at over 5000 lines of relatively unorganized code, it’s not as easy as I’d hoped to trace a variable’s lifetime throughout a frame.

Granted, better tooling from a proper IDE would help here quite a bit. When I wrote “Ooze Factory” in TypeScript, I really appreciated the ability to quickly refactor code.

But I’m in Lua land, which has a severe lack of tooling. On the bright side, it is a very powerful and expressive language, so I get by.

Tables and Metatables

For those of you unaware, tables are Lua’s sole data structure. They act as both arrays and hash maps, which is powerful in itself, but the real power comes from metatables.
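A quick illustration of one table doing double duty as an array and a hash map. This is plain Lua, nothing LINK-specific:

```lua
-- One table acting as both an array (integer keys 1..n) and a hash map.
local t = { "first", "second" } -- array part: t[1], t[2]
t.name = "wall"                 -- hash part: a string key

print(#t)     -- 2 (the length operator only counts the integer sequence)
print(t[2])   -- second
print(t.name) -- wall
```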

If you’ve used an object oriented library in Lua, odds are it uses metatables in the background to make everything work out.

I originally wrote “Robots, Attack!” in Moonscript but after discovering I didn’t really care for the language, I ported it over to Lua.

In the process I created a simple OOP library and made heavy use of metatables to abstract the single inheritance style I used.

I’ve since moved away from pure OOP, but it has its place and metatables make it happen.
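For anyone who hasn’t seen it, the usual pattern behind such libraries looks roughly like this. It’s a generic sketch of single inheritance through __index, not the actual library from “Robots, Attack!”; the Base and Robot names are made up for the example.

```lua
-- Generic single-inheritance sketch: lookups that miss on an instance
-- fall through __index to its class, then to the parent class.
local Base = {}
Base.__index = Base

function Base.new(name)
  return setmetatable({ name = name }, Base)
end

function Base:describe()
  return "I am " .. self.name
end

-- Derived class: its own methods, falling back to Base via a metatable.
local Robot = setmetatable({}, { __index = Base })
Robot.__index = Robot

function Robot.new(name, power)
  local self = Base.new(name)
  self.power = power
  return setmetatable(self, Robot) -- re-point the instance at Robot
end

function Robot:attack()
  return self.name .. " attacks for " .. self.power
end

local r = Robot.new("R-1", 3)
print(r:describe()) -- I am R-1 (inherited from Base)
print(r:attack())   -- R-1 attacks for 3
```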

The short of it is that a metatable is a normal table with a few specific keys that Lua looks for. A metatable can be assigned to any table you want to exhibit its behavior.

The two keys, or metamethods, I’m going to talk about are __newindex and __index, which are used when setting and fetching values, respectively. Both of these metamethods can be assigned either a table or a function.

When you access a key that doesn’t already exist in a table, Lua turns to the metatable. If __newindex or __index points to a table, the value is set on or fetched from that table instead. Likewise, if it points to a function, the function is called.

__newindex

Since my goal is to find out every place I set a value on the item table, let’s look at __newindex first.

For my purposes, I want to print out every time a variable is set. To do this, I could use this function.

```lua
__newindex = function(self, key, value)
  -- stack level 2 is the code that performed the assignment
  local cl = debug.getinfo(2).currentline
  log.item("@%d, setting %s = %s", cl, key, value)
  rawset(self, key, value)
end
```

This is simple enough, but a little too simple.

Remember how I mentioned that it’ll only call the metatable functions if it doesn’t find the value in its own table?

Well, once it calls rawset (effectively self[key] = value, but ignoring metatables), that value is stored on the table itself and the metatable function won’t be called for that key again, at least not until the key is set back to nil.

I forgot about this behavior and was chasing down bugs that didn’t exist so keep it in mind when working with metatables.
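A small, self-contained reproduction of that pitfall. It records messages in a local list instead of calling LINK’s log.item, and the names are purely illustrative.

```lua
-- With rawset, only the FIRST assignment to a key reaches __newindex;
-- later assignments go straight into the table.
local messages = {}
local item = setmetatable({}, {
  __newindex = function(self, key, value)
    messages[#messages + 1] = key .. "=" .. tostring(value)
    rawset(self, key, value)
  end,
})

item.x = 1   -- logged: x is not in the table yet
item.x = 2   -- NOT logged: x now exists on item itself
item.x = nil -- NOT logged either, but this removes x again...
item.x = 3   -- ...so this one is logged

print(#messages) -- 2
```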

This is simple enough to fix: we just need to store the value in another table.

```lua
  -- snip --
  self._data[key] = value
end
```

Adding the _data table has its consequences.

First, we have to make sure we assign the _data variable a table of its very own, but this is trivial enough to set during initialization. I make use of a simple memory manager that takes care of this stuff anyways.

Second, if you use the # operator to find the length of an “item” array, it won’t work because “item” no longer contains any data. If Lua 5.1 and LuaJIT (by default) honored the __len metamethod for tables, this would be an easy fix, but since they do not, it’ll be necessary to go through the code and change any references to #item to #item._data instead.
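A quick check of the length problem, assuming the same _data layout (the metatable here is a stripped-down stand-in, not LINK’s). On Lua 5.2 and later you could add __len = function(self) return #self._data end to the metatable instead; the result below holds on 5.1, LuaJIT, and newer versions alike because no __len is defined.

```lua
-- Proxy shape: all values live in item._data, none on item itself.
local item_mt = {
  __newindex = function(self, key, value) self._data[key] = value end,
  __index = function(self, key) return self._data[key] end,
}
local item = setmetatable({ _data = {} }, item_mt)

item[1] = "first line"
item[2] = "second line"

print(#item)       -- 0 (# looks at the proxy table itself)
print(#item._data) -- 2 (the real array lives in _data)
```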

You might notice that I removed the call to “rawset”. Because there is no metatable on self._data, there’s no reason to bypass it. Also, it’s a little faster because we’re not calling a function.

Realistically though, unless this code block is called many, many times in a tight loop, it’ll never be slow enough to matter. Worrying about it anyway would be premature optimization, which is generally a waste of time.
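Putting the _data pieces together, a minimal version of the proxy might look like the following, with a bare-bones __index so reads work too. item_mt and new_item are hypothetical names, and a plain constructor stands in for the memory manager mentioned above.

```lua
local item_mt = {
  __newindex = function(self, key, value)
    self._data[key] = value -- _data has no metatable, so no rawset needed
  end,
  __index = function(self, key)
    return self._data[key]
  end,
}

local function new_item()
  -- _data must be a real field on the item, set here before setmetatable.
  -- If it were missing, `self._data` inside __index would trigger __index
  -- again and recurse until Lua reports a stack overflow.
  return setmetatable({ _data = {} }, item_mt)
end

local a, b = new_item(), new_item()
a.note_raw = "hello"
a.note_raw = "hello again" -- routed through __newindex every time
-- a.note_raw is "hello again", b.note_raw is nil,
-- and rawget(a, "note_raw") is nil: nothing lands on the item itself
```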

__index

As mentioned, with the __index metamethod set, if you request a key that doesn’t exist in a table, Lua will consult whichever table or function is set on __index.

Much like the __newindex function above, it’s fairly simple:

```lua
__index = function(self, key)
  local value = self._data[key]
  local cl = debug.getinfo(2).currentline
  log.item("@%d, fetching %s -> %s", cl, key, value)
  return value
end
```

This is great and all but not super useful. All this ends up doing is spamming the debug output with every access it makes in the frame.

What I really want to know is if I’ve accessed a variable after I’ve changed it because that could have some unforeseen consequences. I might rely on the fact that I changed the variable before it’s read.

Tracking Changes

I need a way to track whether or not a variable has been accessed after it’s been changed.

In my experience so far, these fetches have only happened shortly after the value was set, so I’ve been able to fix a lot of these issues easily by using temporary local variables (which are faster BTW, but like I said, it generally doesn’t matter).

To only report on variables that are fetched after being set, I added an access table that records each key as it is set. When a key is fetched, __index checks whether it appears in that table and only then prints that it was fetched.

```lua
__newindex = function(self, key, value)
  do
    -- append the key to this frame's list of keys that were set
    local access = self._access
    local c = access.count + 1
    access.count = c
    access[c] = key
  end

  -- snip --

__index = function(self, key)
  local value = self._data[key]
  local access = self._access
  -- only log fetches of keys that were set earlier this frame
  for i = 1, access.count do
    if access[i] == key then
      local cl = debug.getinfo(2).currentline
      log.item("@%d, fetching %s -> %s", cl, key, value)
      break
    end
  end
  return value
end
```

Now every time we access a variable after it has been set, it’ll log the access.

Of course, I’m only interested in whether a variable has been accessed after it’s been set within a single update frame. To reset the access table, I just iterate through every item and set its access count to zero:

```lua
for i = 1, wall.count do
  local item = wall[i]
  item._access.count = 0
end
```

Note: “wall” is what I call the data structure that stores all the notes.
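Here’s a runnable version of the whole set-then-fetch tracking loop, including the per-frame reset. A local reported list stands in for log.item, and the new_item helper and the tiny one-item wall are hypothetical; only the _data/_access layout follows the code above.

```lua
local reported = {}

local item_mt = {
  __newindex = function(self, key, value)
    local access = self._access
    local c = access.count + 1
    access.count = c
    access[c] = key -- remember the key was set this frame
    self._data[key] = value
  end,
  __index = function(self, key)
    local value = self._data[key]
    local access = self._access
    for i = 1, access.count do
      if access[i] == key then
        reported[#reported + 1] = key -- fetched after being set
        break
      end
    end
    return value
  end,
}

local function new_item(initial)
  local data = {}
  for k, v in pairs(initial) do data[k] = v end -- seed without tracking
  return setmetatable({ _data = data, _access = { count = 0 } }, item_mt)
end

local wall = { count = 1 }
wall[1] = new_item({ x = 10, y = 20 })

local item = wall[1]
local x = item.x -- never set this frame: not reported
item.y = 25
local y = item.y -- set earlier this frame: reported

-- end of frame: reset every item's access list
for i = 1, wall.count do
  wall[i]._access.count = 0
end

local y2 = item.y -- not reported again after the reset
-- reported is now { "y" }
```

Stale keys beyond count are simply ignored and overwritten the next frame, which is what makes the reset a single assignment.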

Why Use an Array instead of a Hash?

In the process of writing this I realized I could have just done this…

```lua
-- in "__newindex"
self._access[key] = true

-- in "__index"
if self._access[key] then
  --log.item...
end
```

…and it would have been much faster.

But this is debug code, so I’m going to remove it before I ship.

Also, once I’ve successfully moved all setting actions to the transaction area, I can skip checking the variable access entirely, so the only real penalty is appending a new entry to the access array (which is fairly fast anyway).

The big reason why I use an array is the bit of code that is always called, every frame:

```lua
for i = 1, wall.count do
  local item = wall[i]
  item._access.count = 0
end
```

I’d have to replace it with this:

```lua
for i = 1, wall.count do
  local ia = wall[i]._access
  for k, v in next, ia do
    ia[k] = false
  end
end
```

Iterating through a hash map with next, or more commonly pairs, is very slow. Like really slow. So slow, in fact, I changed my entire programming philosophy because of it. But that’s a post for another time.

Whenever possible, I prefer arrays to hash maps when the data is constantly being cleared.
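If you want to check the difference on your own setup, a rough micro-benchmark of the two reset loops might look like this. The tables are synthetic and no particular timings are claimed; the structural point is that resetting a count touches one field per item, while clearing the hash visits every key ever recorded (and, since keys are set to false rather than removed, the hash never shrinks).

```lua
-- Rough timing comparison of the two reset strategies. Absolute numbers
-- vary a lot by machine, Lua version, and LuaJIT vs PUC Lua.
local ITEMS, FRAMES = 1000, 100
local keys = { "x", "y", "w", "h", "note", "note_raw", "color", "z" }

local array_wall, hash_wall = {}, {}
for i = 1, ITEMS do
  local arr, set = { count = 0 }, {}
  for k = 1, #keys do
    arr.count = arr.count + 1
    arr[arr.count] = keys[k]
    set[keys[k]] = true
  end
  array_wall[i], hash_wall[i] = arr, set
end

local t0 = os.clock()
for _ = 1, FRAMES do
  for i = 1, ITEMS do
    array_wall[i].count = 0 -- one field per item; stale entries ignored
  end
end
local t1 = os.clock()
for _ = 1, FRAMES do
  for i = 1, ITEMS do
    local ia = hash_wall[i]
    for k in next, ia do
      ia[k] = false -- every recorded key is visited each frame
    end
  end
end
local t2 = os.clock()

print(string.format("array reset: %.3fs  hash reset: %.3fs", t1 - t0, t2 - t1))
```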

And iterating through an array is quite fast anyway, so I always use them by default. If, and that’s a big if, it ever becomes a problem, I deal with it then.

Success

With these changes, everything I do to the “item” tables will print out these log messages letting me know where I need to go in and make changes.

Well, mostly. Metamethods won’t be called for changes made inside sub-tables, which is why I avoid sub-tables when possible. The one sub-table I do use doesn’t have any effect on the note’s integrity and is always regenerated before use.
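Here’s why sub-table changes slip through. In this sketch (same hypothetical _data layout, with local lists standing in for logging), writing into a sub-table only registers as a fetch of the sub-table on the item; the write itself happens on a plain table with no metatable.

```lua
local sets, gets = {}, {}
local item = setmetatable({ _data = { note = { "old line" } } }, {
  __newindex = function(self, key, value)
    sets[#sets + 1] = key
    self._data[key] = value
  end,
  __index = function(self, key)
    gets[#gets + 1] = key
    return self._data[key]
  end,
})

item.note[1] = "new line" -- fetches `note`, then writes into the sub-table

-- sets is still empty; gets holds just "note"
```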

Luckily, a lot of the fetches occur because I used the table variable to store temporary changes. I just add local variables to the mix and queue the result at the very end.

Granted, there are some fetches that happen after I set values within the transaction handling code, but that’s fine.

My goal was to make sure that any permanent changes happened in one area so they can be more easily reasoned about and replayed for autosaving capabilities. All these changes are in response to the new information queued up to be processed.

To squelch such reporting, before the transaction starts I disable printing and enable it after the transaction is complete.
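The overall shape of the transaction idea, as described in this post, can be sketched as a queue of pending changes applied only at the end of the frame, with reporting switched off while they’re applied. Everything here (queue_set, commit, the reporting flag, the change record layout) is hypothetical and far simpler than whatever LINK actually does.

```lua
-- Hypothetical transaction sketch: changes are queued during the frame and
-- applied in one place at the end, with reporting squelched while applying.
local reporting = true
local reports = {}
local queue = { count = 0 }

local function report(msg)
  if reporting then reports[#reports + 1] = msg end
end

local item = setmetatable({ _data = {} }, {
  __newindex = function(self, key, value)
    report("set " .. key) -- flags any set made outside a transaction
    self._data[key] = value
  end,
  __index = function(self, key) return self._data[key] end,
})

-- During the frame: record the intended change instead of applying it.
local function queue_set(target, key, value)
  local c = queue.count + 1
  queue.count = c
  queue[c] = { target = target, key = key, value = value }
end

-- End of frame: the one place permanent changes happen.
local function commit()
  reporting = false
  for i = 1, queue.count do
    local change = queue[i]
    change.target[change.key] = change.value
    queue[i] = nil
  end
  queue.count = 0
  reporting = true
end

-- Work with a temporary local, queue the result, commit at end of frame.
local width = 200
width = width + 50
queue_set(item, "width", width)
local before = item.width -- nil: nothing applied yet
commit()
-- item.width is now 250, and reports is still empty because the set
-- happened while reporting was switched off
```

Because every permanent change passes through the queue, the same records are a natural starting point for replaying changes, which is the autosaving angle mentioned above.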

Overall, the refactor is moving along quite smoothly. So smoothly, in fact, I had time to write this post.

New Preview Build

Everything related to the storage of note data should now only be processed as a transaction.

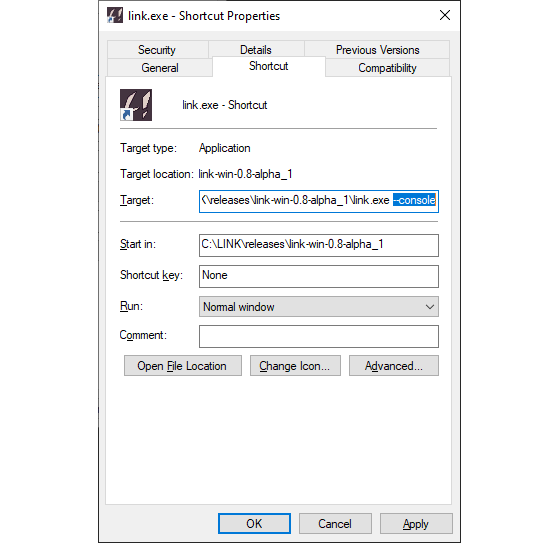

Not that this really means much right now, but if you run LINK with --console, either from the command line or by creating a shortcut and adding --console to the end of the shortcut’s target string, you’ll be able to see what’s being updated each frame.

If you’d like to see even more, in “game.cfg”, near the bottom, there’s an option DEBUG_QUEUE_DETAIL which can be changed from false to true.

I’ve still got work to do before auto saving is possible but this is a great start!

I fixed a bug where creating a note would make the cursor display incorrectly and select the first character typed, meaning it would be erased by the next character typed.

Another bug fixed was related to the command activation animation playing when it shouldn’t.

Both bugs were found because of the transition to the new system and I even found a few issues that were guaranteed to cause me problems in the future.

In any case, I was able to clean up a lot of code so I’m pretty pumped.

What’s Next

Auto saving is definitely a feature I’d like to get finished up but it’s not the most pressing matter.

I’d like to sort out the plugin API so I can move commands such as goto, image, and line over to this new API.

In particular, line is giving me the most problems because of how I want it to behave. Ideally I’d use multiple notes, linked together, to form a single line. The problem with that is there’s no good way to reference other notes without having that reference broken when notes are removed.

The current implementation uses one note but contains special logic to manage the other node. This has caused me numerous headaches and LINK still has the related issues to prove it.

At the very least, I’m hoping the plugin architecture will allow me to isolate the issues to a plugin file rather than having it sprawled out across the entire program.

That should keep me busy for a while.


LINK!

Notes on an endless landscape

| Status | On hold |
|---|---|
| Category | Tool |
| Author | hovershrimp |
| Tags | Creative, Game Design, Management, Minimalist, planning, productivity |
